Camera networks are now ubiquitous, with applications in security, disaster response, environmental monitoring, and smart environments, among others. Recent technological advances have led to a next generation of real-time, distributed, embedded systems that perform computer vision tasks using multiple cameras. Bernhard Rinner’s research team and partners have proposed a computational framework that adopts the concepts of self-awareness and self-expression to manage the complex tradeoffs among performance, flexibility, resources, and reliability more efficiently. IEEE Computer magazine features their findings in its July 2015 issue.

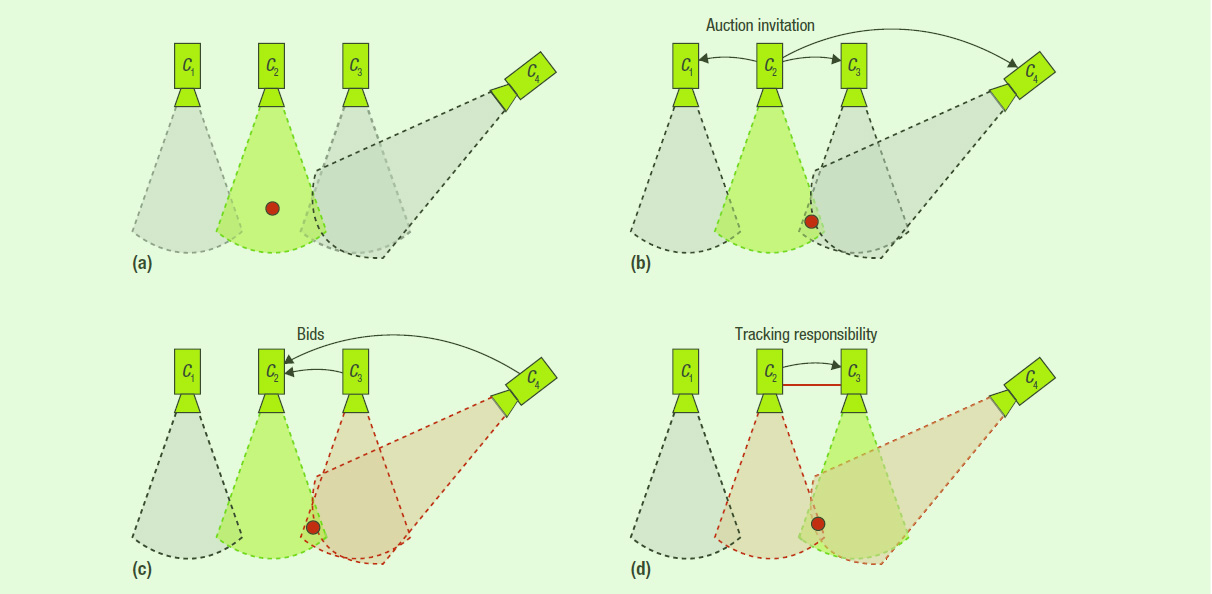

The concepts of self-awareness (SA) and self-expression (SE) are new to computing and networking. SA refers to a system’s ability to obtain and maintain knowledge about its state, behavior, and progress, whereas SE refers to the generation of autonomous behavior based on such SA. Together, SA and SE support effective and autonomous adaptation of system behavior to changing conditions.

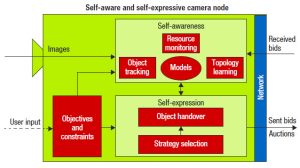

Our framework instantiates dedicated software components and their interactions to create a flexible self-aware and self-expressive multicamera tracking application. The figure depicts the architecture of an individual camera node, which consists of six building blocks, each with its own specific objectives, that interact with one another and with other nodes in the network. SA is realized through the object tracking, resource monitoring, and topology learning blocks. Instead of relying on predefined knowledge and rules, these blocks use online learning to maintain models of the camera’s state and context. The models serve as input to SE, which is composed of the object handover and strategy selection blocks. Finally, the objectives and constraints block represents the camera’s objectives and resource constraints, both of which strongly influence the other building blocks.
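To make the division of labor among the six blocks more concrete, the following Python sketch models a single camera node. It is only an illustration under stated assumptions, not the framework's actual implementation: the class names, the two thresholds, the strategy names `"broadcast"` and `"unicast"`, and the moving-average update for neighbor weights are all hypothetical choices made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectivesAndConstraints:
    """Objectives and constraints block (both values are hypothetical)."""
    min_tracking_confidence: float = 0.5  # objective: below this, seek a handover
    max_cpu_load: float = 0.8             # constraint: above this, save resources


@dataclass
class SelfAwareness:
    """Models maintained online by the three SA blocks."""
    tracking_confidence: dict = field(default_factory=dict)  # object tracking
    cpu_load: float = 0.0                                     # resource monitoring
    neighbor_weights: dict = field(default_factory=dict)      # topology learning

    def observe_tracking(self, object_id, confidence):
        self.tracking_confidence[object_id] = confidence

    def observe_resources(self, cpu_load):
        self.cpu_load = cpu_load

    def observe_handover(self, neighbor_id, success, rate=0.2):
        # Assumed learning rule: exponential moving average of handover success.
        old = self.neighbor_weights.get(neighbor_id, 0.0)
        outcome = 1.0 if success else 0.0
        self.neighbor_weights[neighbor_id] = (1 - rate) * old + rate * outcome


class SelfExpression:
    """Strategy selection and object handover, driven by the SA models."""

    def __init__(self, goals):
        self.goals = goals

    def select_strategy(self, sa):
        # Under high load, contact only the best neighbor to limit communication.
        if sa.cpu_load > self.goals.max_cpu_load:
            return "unicast"
        return "broadcast"

    def handover_targets(self, sa, object_id):
        confidence = sa.tracking_confidence.get(object_id, 0.0)
        if confidence >= self.goals.min_tracking_confidence:
            return []  # the object is still tracked well enough locally
        ranked = sorted(sa.neighbor_weights, key=sa.neighbor_weights.get, reverse=True)
        if self.select_strategy(sa) == "broadcast":
            return ranked
        return ranked[:1]


# Usage: one node learns about two neighbors, then decides whom to ask.
goals = ObjectivesAndConstraints()
sa = SelfAwareness()
se = SelfExpression(goals)

sa.observe_handover("cam_b", True)
sa.observe_handover("cam_b", True)
sa.observe_handover("cam_c", False)
sa.observe_tracking("person_1", 0.3)  # confidence dropped below the objective
sa.observe_resources(0.9)             # node is heavily loaded

targets_under_load = se.handover_targets(sa, "person_1")
sa.observe_resources(0.1)
targets_when_idle = se.handover_targets(sa, "person_1")
```

In this sketch the SA object holds no fixed rules about the network; its neighbor weights change only as handover outcomes are observed, which mirrors the reliance on online learning described above. The SE object reads those models together with the objectives and constraints to choose both a strategy and the handover targets.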

Self-awareness and self-expression are fundamental to developing camera networks capable of learning and maintaining their topology, performing distributed object detection and tracking handover, and autonomously selecting strategies to achieve more Pareto-efficient outcomes. In our proposed approach, all processing is encapsulated in six building blocks that are embedded in resource-limited smart camera nodes and aggregated into a completely decentralized and thus scalable network. However, computational SA and SE are not limited to camera networks. In fact, we are confident that these concepts could enable other types of systems and networks to meet a multitude of requirements with respect to functionality, flexibility, performance, resource usage, costs, reliability, and safety.