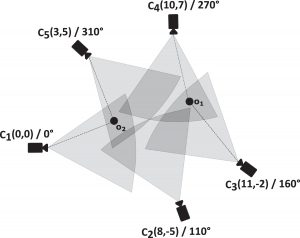

Many multi-camera applications rely on knowing the spatial relationships among the individual camera nodes. However, establishing such a network-wide calibration is typically time-consuming and requires user interaction. In her recent work, Jennifer Simonjan developed a decentralized and resource-aware algorithm for estimating the poses of all camera nodes without any user interaction. “Self-calibration is achieved in two steps”, she explains. “First, overlapping camera pairs estimate relative positions and orientations by exchanging locally measured distances and angles to detected objects. Second, calibration information of overlapping cameras is spread throughout the network such that poses of non-overlapping cameras can also be estimated.”
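To make the two steps more concrete, here is a minimal Python sketch. It is not the algorithm from the paper, whose details are not given here. It assumes a planar (2D) setting, noise-free measurements, and exactly two objects seen by both cameras of a pair. All function names (`observe`, `relative_pose`, `compose`) and the example poses are hypothetical and chosen for illustration only.

```python
import math

def rotate(point, theta):
    """Rotate a 2D point counter-clockwise by theta radians."""
    x, y = point
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)

def wrap(theta):
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(theta), math.cos(theta))

def observe(camera_pose, obj):
    """Simulate a local measurement: (distance, angle) of a world point
    as seen from a camera with world pose (theta, x, y)."""
    theta, cx, cy = camera_pose
    lx, ly = rotate((obj[0] - cx, obj[1] - cy), -theta)
    return (math.hypot(lx, ly), math.atan2(ly, lx))

def polar_to_xy(distance, angle):
    """Convert a (distance, angle) measurement to local coordinates."""
    return (distance * math.cos(angle), distance * math.sin(angle))

def relative_pose(obs_a, obs_b):
    """Step 1 (sketch): pose (theta, tx, ty) of camera B in A's frame,
    from measurements of the same two objects, given in the same order."""
    a1, a2 = (polar_to_xy(*m) for m in obs_a)
    b1, b2 = (polar_to_xy(*m) for m in obs_b)
    # The segment between the two objects has a direction in each frame;
    # the difference of those directions is the relative orientation.
    theta = wrap(math.atan2(a2[1] - a1[1], a2[0] - a1[0])
                 - math.atan2(b2[1] - b1[1], b2[0] - b1[0]))
    # The translation aligns the first object after rotating B's view.
    rb1 = rotate(b1, theta)
    return (theta, a1[0] - rb1[0], a1[1] - rb1[1])

def compose(pose_ab, pose_bc):
    """Step 2 (sketch): chain B-in-A and C-in-B to obtain C-in-A, so that
    cameras without a shared view can still be related."""
    th_ab, tx, ty = pose_ab
    th_bc, ux, uy = pose_bc
    rx, ry = rotate((ux, uy), th_ab)
    return (wrap(th_ab + th_bc), tx + rx, ty + ry)

# Hypothetical ground truth: A defines the common frame; A and C share no objects.
cam_a = (0.0, 0.0, 0.0)
cam_b = (math.pi / 2, 4.0, 0.0)
cam_c = (3 * math.pi / 4, 8.0, 3.0)
objects_ab = [(2.0, 1.0), (3.0, -1.0)]   # seen by A and B
objects_bc = [(6.0, 2.0), (7.0, 0.0)]    # seen by B and C

pose_ab = relative_pose([observe(cam_a, o) for o in objects_ab],
                        [observe(cam_b, o) for o in objects_ab])
pose_bc = relative_pose([observe(cam_b, o) for o in objects_bc],
                        [observe(cam_c, o) for o in objects_bc])
pose_ac = compose(pose_ab, pose_bc)      # pose of C in A's frame
```

In this noise-free example, `pose_ab` recovers the pose of camera B and `pose_ac` recovers the pose of camera C in the frame of camera A, even though A and C never observe the same object. With noisy measurements, more than two shared objects and some form of averaging or least-squares fitting would be needed; that is beyond this sketch.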

Her PhD supervisor Bernhard Rinner points out: “This approach does not rely on a priori topological information and delivers the extrinsic camera parameters with respect to a common coordinate system.” Such network-wide calibration helps to automate the network setup and to account for topology changes during network operation. The approach is fully decentralized in order to support scalability and network dynamics. In their recently published journal paper, the authors present a simulation study that analyzes the spatial accuracy and computational effort of the approach under noisy measurements and different communication schemes.

Publication

Jennifer Simonjan and Bernhard Rinner. Decentralized and resource-efficient self-calibration of visual sensor networks. Ad Hoc Networks. 88: 212-228. 2019.